How Cryptorefills Integrated MCP

Cryptorefills is excited to announce our Model Context Protocol (MCP) integration and share a working update on it.

When we set out to build our Model Context Protocol (MCP) server, we wanted to ensure it worked not just in theory but in real AI-driven commerce flows. To do this, we developed our MCP layer on top of the Cryptorefills API and then tested it with AI agents in real purchase scenarios. We used these tests as a feedback loop to refine the server, fix edge cases, and ensure a smooth developer experience.

What is MCP?

The Model Context Protocol (MCP) is an emerging standard that lets AI systems connect directly to apps and services. So, instead of only answering questions, AI can fetch real data, test workflows, and trigger actions inside applications.
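To make this concrete, here is a minimal sketch of the kind of messages an MCP client sends. MCP exchanges JSON-RPC 2.0 messages, with methods such as `tools/list` and `tools/call`. The tool name `search_products` and its arguments are hypothetical placeholders, not the actual Cryptorefills tool names.

```python
import json
from itertools import count

_ids = count(1)  # simple increasing request ids


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Call one tool by name. "search_products" and its arguments are
# hypothetical, chosen only for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_products", "arguments": {"country": "US", "query": "gift card"}},
)

print(json.dumps(call_tool, indent=2))
```

In practice an MCP client library builds and sends these messages for you. The sketch only shows what travels over the wire.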

For Cryptorefills, this means our platform evolves from simply selling gift cards and mobile top-ups for crypto into a plug-and-play commerce layer that AI agents can use directly.

Why did we use AI agents to test the server?

To validate our MCP implementation, we needed to know how real AI models would interact with it. We chose FastAgent, which is an open framework for building AI agents, as our test client.

How does FastAgent work?

FastAgent provides a lightweight environment where you define tools (functions or APIs), connect them to an AI model, and let the agent plan and execute actions. The model reasons about the goal, calls the tools when needed, and iterates until the task is complete. This made it ideal for testing an MCP server, since each tool maps naturally to an MCP-exposed capability.
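The plan-and-execute loop described above can be sketched in a few lines. This is not FastAgent's API. It is a generic illustration in which a scripted function stands in for the AI model, and the tools `list_products` and `prepare_order` are hypothetical stand-ins for MCP-exposed capabilities.

```python
from typing import Callable


# Hypothetical tools standing in for MCP-exposed capabilities.
def list_products(country: str) -> list[str]:
    return ["Example Gift Card", "Example Mobile Top-Up"] if country == "US" else []


def prepare_order(product: str, amount: int) -> dict:
    return {"product": product, "amount": amount, "status": "awaiting_payment"}


TOOLS: dict[str, Callable] = {
    "list_products": list_products,
    "prepare_order": prepare_order,
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: chooses the next action from the results so far."""
    results = [step["result"] for step in history]
    if not results:
        return {"tool": "list_products", "args": {"country": "US"}}
    if len(results) == 1:
        return {"tool": "prepare_order", "args": {"product": results[0][0], "amount": 25}}
    return {"done": True, "answer": results[-1]}


def run_agent(model, tools, max_steps: int = 5):
    """Ask the model for an action, run the tool, feed the result back, repeat."""
    history = []
    for _ in range(max_steps):
        action = model(history)
        if action.get("done"):
            return action["answer"]
        result = tools[action["tool"]](**action["args"])
        history.append({"tool": action["tool"], "result": result})
    raise RuntimeError("agent did not finish within max_steps")


order = run_agent(scripted_model, TOOLS)
print(order)
```

A real model replaces `scripted_model`, which is why differences between models show up as skipped steps or missing arguments in this loop.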

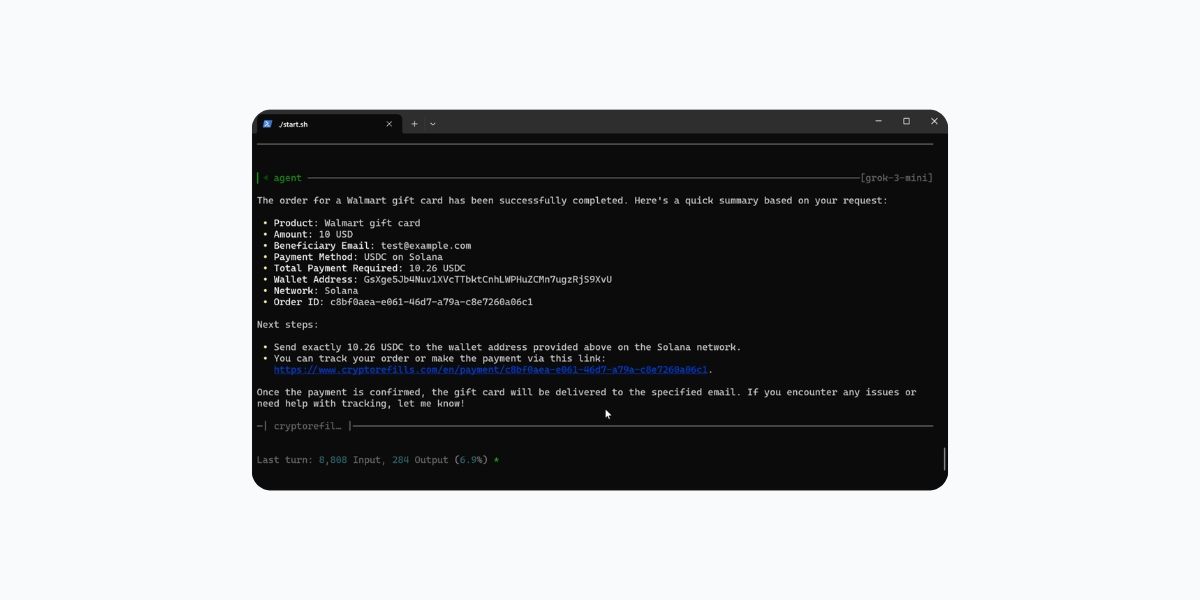

With the FastAgent client in place, we tested how a range of AI models handled the purchasing flow, from local Ollama models to remote APIs like OpenAI and Grok. To give you a closer look, we’ve included a short demo video showing how the MCP integration works in practice, with an agent running through a real transaction flow.

Our Key Findings

Grok worked best. It consistently interacted with the MCP server cleanly, following the required steps to browse the catalog, select products, and prepare purchases (both gift cards and mobile top-ups) without errors or backtracking. Other models, including GPT, produced incomplete or inconsistent flows, sometimes skipping required parameters or failing mid-process.

AI assumptions surfaced edge cases. At times, models made premature assumptions (e.g., defaulting to USDT on Solana). These cases helped us harden the server and improve tool definitions.
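One way to guard against such assumptions is to mark payment details as required in the tool definition and reject calls that omit them, instead of falling back to a default. The sketch below uses the `name` / `description` / `inputSchema` shape of MCP tool definitions, but the tool itself, its parameters, and the coin and network lists are hypothetical and do not reflect the actual Cryptorefills server.

```python
# Hypothetical tool definition; inputSchema follows JSON Schema conventions.
PREPARE_ORDER_TOOL = {
    "name": "prepare_order",
    "description": "Prepare an order. Ask the user for coin and network; never assume them.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "product_id": {"type": "string"},
            "coin": {"type": "string", "enum": ["BTC", "ETH", "USDT"]},
            "network": {"type": "string", "enum": ["Bitcoin", "Ethereum", "Solana"]},
        },
        "required": ["product_id", "coin", "network"],
    },
}


def validate_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    schema = tool["inputSchema"]
    problems = [
        f"missing required parameter: {name}"
        for name in schema["required"]
        if name not in arguments
    ]
    for name, value in arguments.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unknown parameter: {name}")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"unsupported value for {name}: {value!r}")
    return problems


# A call that leaves out coin and network is rejected with explicit messages,
# which the agent can relay to the user as a question.
print(validate_arguments(PREPARE_ORDER_TOOL, {"product_id": "p1"}))
```

Returning explicit error messages gives the model something to act on, so it can ask the user which coin and network to use instead of guessing.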

Human control over payments remains key. While the agent could prepare an order end-to-end, we deliberately kept the final payment execution manual for security and compliance.
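A minimal sketch of such a human-in-the-loop gate follows. The `PreparedOrder` type and `confirm_payment` function are hypothetical names used for illustration, not part of our server. The point is only that the agent's output stops at a prepared order, and nothing proceeds without an explicit human "yes".

```python
from dataclasses import dataclass


@dataclass
class PreparedOrder:
    product: str
    amount: str
    coin: str
    status: str = "awaiting_confirmation"


def confirm_payment(order: PreparedOrder, ask=input) -> PreparedOrder:
    """Only an explicit 'yes' from a human moves the order forward."""
    reply = ask(
        f"Pay for {order.product} ({order.amount} {order.coin})? Type 'yes' to confirm: "
    )
    order.status = "confirmed" if reply.strip().lower() == "yes" else "cancelled"
    return order


# The prompt function is injectable so the gate can be exercised without a terminal.
approved = confirm_payment(
    PreparedOrder("Example Gift Card", "0.0005", "BTC"), ask=lambda _: "yes"
)
declined = confirm_payment(
    PreparedOrder("Example Gift Card", "0.0005", "BTC"), ask=lambda _: ""
)
print(approved.status, declined.status)
```

Anything other than "yes", including an empty reply, cancels the order, so the safe outcome is the default.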

Why does this matter?

By integrating MCP and validating it with real AI agents:

- Developers can connect directly to Cryptorefills’ catalog, pricing, and payments via natural language interfaces.

- AI agents can build commerce workflows without custom coding or brittle scraping.

- Innovation accelerates, enabling new global crypto-commerce experiences on top of our platform.

What’s next?

MCP adoption is growing quickly in areas where automation and trust matter most: global retail, cross-border payments, and loyalty systems.

For us, this is just the beginning. We see MCP becoming a core interoperability layer that bridges AI, payments, and global commerce.

We’re inviting developers, partners, and companies who want to build the future of AI-driven commerce to collaborate with us. By building on our MCP server, you can integrate directly with our catalog, pricing, payments, and rewards through natural language and unlock new use cases faster.

Interested? Reach out to us at media@cryptorefills.com, and let’s explore how we can build together!

FAQs

Can developers already use MCP with Cryptorefills?

Yes. The integration is live, and MCP-compatible agents or apps can connect today.

Does the AI execute payments automatically?

It can. An AI agent with control of a crypto wallet is technically able to complete the entire payment flow on its own.

But it shouldn’t. For security, compliance, and risk management, we strongly recommend keeping the final payment confirmation human-controlled.

What does this mean for crypto users?

Soon you could tell an AI agent, “Buy an Amazon gift card with BTC,” and it will handle catalog search, selection, and checkout preparation automatically.